Trustworthy AI for responsible competitiveness

How does one teach machines and robots, essentially a bunch of circuitry and binary code, to behave ethically? Artificial intelligence isn't evil, but the way its creators wield and apply it can make it seem so.

Two bodies so far, Singapore's IMDA and the European Commission, seem to believe that ethics, like everything else built into machines and robots, can be programmed into them. More specifically, they believe ethics-guided settings can be built in to make AI behave ethically.

According to a draft of ethics guidelines authored by the European Commission's High-Level Expert Group on Artificial Intelligence (HLEG), Trustworthy AI has two components.

First, it should respect fundamental rights, applicable regulation, and core principles and values, ensuring an "ethical purpose". Second, it should be "technically robust" and reliable, since even with good intentions a lack of technological mastery can cause unintentional harm.

There are already real-life examples of this: autonomous vehicles have struck and killed pedestrians.

Ensuring ethical purpose

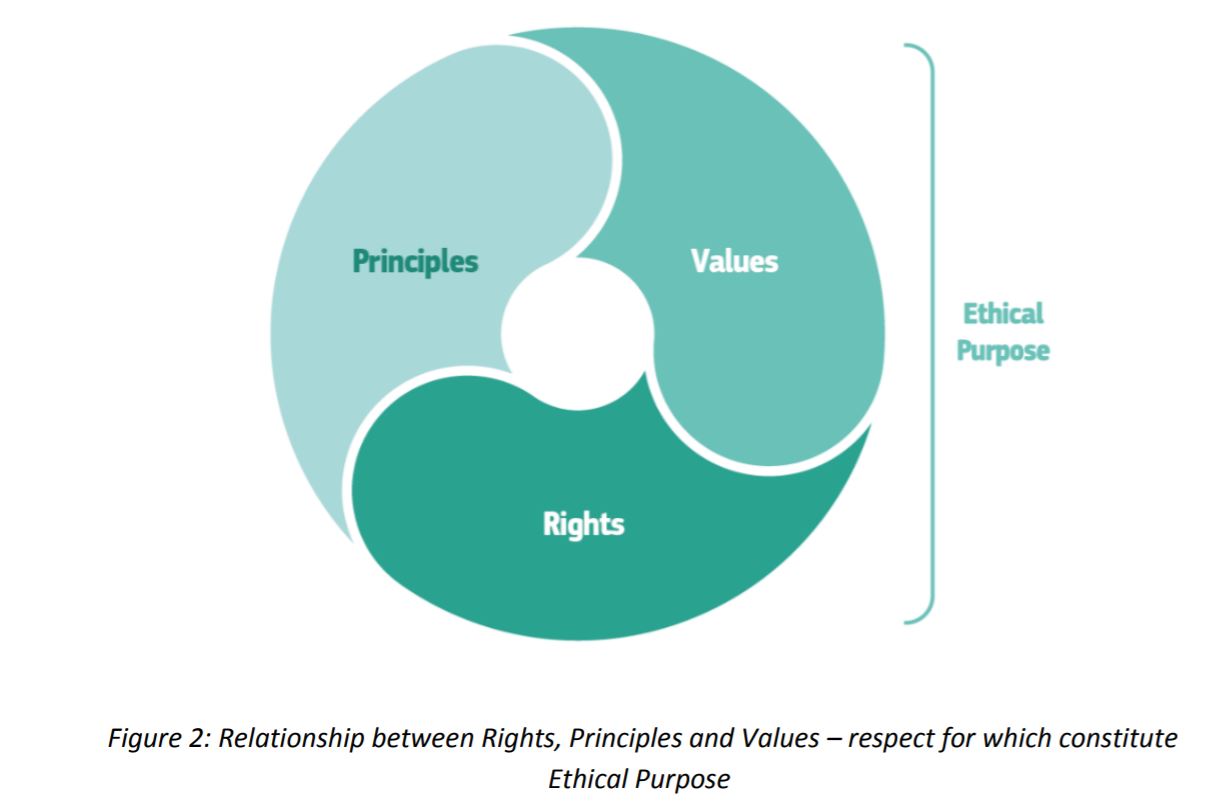

Ethical principles are abstract. The HLEG draft tries to operationalise ethics for artificial intelligence by referring to the fundamental rights commitment of the EU Treaties and the Charter of Fundamental Rights. It also draws on other legal instruments (the European Social Charter) and legislative acts (the General Data Protection Regulation).

All of these serve to identify ethical principles and to specify how concrete ethical values can be operationalised.

Fundamental rights provide the bedrock for formulating ethical principles. These principles are abstract, high-level norms that developers, deployers, users and regulators should follow in order to uphold the goal of human-centric, Trustworthy AI. Values, in turn, give more concrete guidance on how to uphold those principles, while also underpinning fundamental rights.

At the end of the day, we want to leverage artificial intelligence without crippling it with the restrictions that ethical values might place on it. The idea is to enable competitiveness, but responsible competitiveness.

The ten requirements for Trustworthy AI listed below were derived from the rights, principles and values set out in Chapter I of the draft. They are all equally important. However, the specific context of each application domain and industry must be taken into account when applying them.

- Accountability

- Data Governance

- Design for all

- Governance of AI Autonomy (Human oversight)

- Non-Discrimination

- Respect for (& Enhancement of) Human Autonomy

- Respect for Privacy

- Robustness

- Safety

- Transparency

The 37-page draft also raises a list of critical concerns.

Critical concerns raised by AI on which no conclusion could be reached

- Identification without consent.

- Covert AI systems – humans must be able to ask, and verify, whether they are interacting with an AI.

- Normative and mass citizen scoring without consent – citizen scoring is used in school systems and for driving licences, for example. A fully transparent procedure should be available to citizens, at least in limited social domains. This would let them make an informed decision about whether to opt out, where possible.

- Lethal autonomous weapon systems (LAWS) – LAWS can carry out the critical functions of selecting and attacking individual targets. Human control could potentially be relinquished entirely, and the risks of malfunction left unaddressed.

- Longer-term concerns – future uses of AI are, at best, still speculative and require extrapolating into the future.