AI that has ethical purpose and technical robustness

Artificial intelligence (AI) has made the news more often for the good it does than for any harmful impact it has on our lives. Its current prominence can also be attributed to the availability of vast amounts of data, more powerful computing and breakthroughs in AI techniques such as machine learning.

Given how pervasive AI has become in areas such as autonomous vehicles, healthcare, home and service electronics, education and cybersecurity, certain groups have hunkered down to set out ethics guidelines for AI that we can trust.

First, in Singapore, an advisory council held robust discussions on the ethical use of AI and data. In a statement, the Info-communications Media Development Authority (IMDA) said, “A trusted AI ecosystem is critical for industries to effectively adopt innovative emerging technologies and business models, while ensuring strong consumer confidence, protection and participation.

“In the area of data and data-driven technologies like AI, the development of responsible practices must be informed by diverse views and a global perspective.”

Not long after, the European Commission’s High-Level Expert Group on AI (AI HLEG) released its draft ethics guidelines.

Trustworthy AI

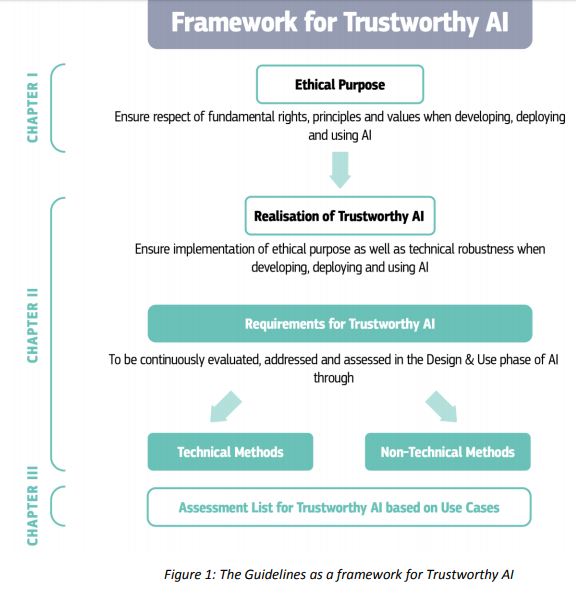

According to the AI HLEG’s draft, trustworthy AI has two components. First, it should respect fundamental rights, applicable regulation, and core principles and values, ensuring an “ethical purpose”. Second, it should be “technically robust” and reliable, since even with good intentions, a lack of technological mastery can cause unintentional harm.

The draft also claims to offer more than a list of core values and principles for AI: it provides guidance on the concrete implementation and operationalisation of AI systems.

The Trustworthy AI guidance is addressed to all relevant stakeholders that develop, deploy or use AI: companies, organisations, researchers, public services, institutions, individuals or other entities, and even governments.

How compulsory is this?

Compliance is voluntary. A mechanism will be put in place that enables all stakeholders to formally endorse and sign up to the Guidelines on a voluntary basis.

The AI HLEG emphasises that “these Guidelines are not meant to stifle AI innovation in Europe, but instead aim to use ethics as inspiration to develop a unique brand of AI, one that aims at protecting and benefiting both individuals and the common good.”